Introduction

This blog is a three-part series. See part 1 for retrieving the dataset and part 3 (upcoming) for visualization.

In big and old legacy systems, tests are often a mess. Especially end-to-end-tests with UI testing frameworks like Selenium quickly become a PITA aka unmaintainable. They are running slow and you quickly get overwhelmed by plenty of tests that do partly the same, too.

In this data analysis, I want to illustrate a way that can take us out of this misery. We want to spot test cases that are structurally very similar and thus can be seen as duplicate. We’ll calculate the similarity between tests based on their invocations of production code. We can achieve this by treating our software data as observations of linear features. This opens up ways for us to leverage existing mathematically techniques like vector distance calculation (as we’ll see in this post) as well as machine learning techniques like multidimensional scaling or clustering (in a follow-up post).

As software data under analysis, we’ll use the JUnit tests of a Java application for demonstrating the approach. We want to figure out, if there are any test cases that test production code where other, more dedicated tests are already testing as well. With the result, we could be able to delete some superfluous test cases (and always remember: less code is good code, no code is best :-)).

Reality check

The real use case originates from a software system with a massive amount of Selenium end-to-end-tests that uses the Page Object pattern. Each page object represents one HTML site of a web application. Technically, a page object exposes methods in the programming language you use that enables the interaction with websites programmatically. In such a scenario, you can infer which tests are calling the same websites and are triggering the same set of UI components (like buttons). This is a good estimator for test cases that test the same use cases in the application. We can use the results of such an analysis to find repeating test scenarios.

Dataset

I’m using a dataset that I’ve created in a previous blog post with jQAssistant. It shows which test methods call which code in the application (the “production code”). It’s a pure static and structural view of our code, but can be very helpful as we’ll see shortly.

Note: There are also other ways to get these kinds of information e. g. by mining the log file of a test execution (this would even add real runtime information as well). But for the demonstration of the general approach, the pure static and structural information between the test code and our production code is sufficient.

First, we read in the data with Pandas – my favorite data analysis framework for getting things easily done.

import pandas as pd

invocations = pd.read_csv("datasets/test_code_invocations.csv", sep=";")

invocations.head()

What we’ve got here are

- all names of our test types (

test_type) and production types (prod_type) - the signatures of the test methods (

test_method) and production methods (prod_method) - the number of calls from the test methods to the production methods (

invocations).

Analysis

OK, let’s do some actual work! We want

- to calculate the structural similarity of test cases

- to spot possible duplications of tests

to figure out which test cases are superfluous (and can be deleted).

What we have are all tests cases (aka test methods) and their calls to the production code base (= the production methods). We can transform this data to a matrix representation that shows which test method triggers which production method by using Pandas’ pivot_table function on our invocations DataFrame.

invocation_matrix = invocations.pivot_table(

index=['test_type', 'test_method'],

columns=['prod_type', 'prod_method'],

values='invocations',

fill_value=0

)

# show interesting parts of results

invocation_matrix.iloc[4:8,4:6]

What we’ve got now is the information for each invocation (or non-invocation) of test methods to production methods. In mathematical words, we’ve now got an n-dimensional vector for each test method where n is the number of tested production methods in our code base. That means we’ve just transformed our software data to a representation so that we can work with standard Data Science tooling :-D! That means all further problem-solving techniques in this area can be reused by us.

And this is exactly what we do now in our further analysis: We’ve reduced our problem to a distance calculation between vectors (we use distance instead of similarity because later used visualization techniques work with distances). For this, we can use the cosine_distances function (see this article for the mathematical background) of the machine learning library scikit-learn to calculate a pair-wise distance matrix between the test methods aka linear features.

from sklearn.metrics.pairwise import cosine_distances

distance_matrix = cosine_distances(invocation_matrix)

# show some interesting parts of results

distance_matrix[81:85,60:62]

From this result, we create a DataFrame to get a better visual representation of the data.

distance_df = pd.DataFrame(distance_matrix, index=invocation_matrix.index, columns=invocation_matrix.index)

# show some interesting parts of results

distance_df.iloc[81:85,60:62]

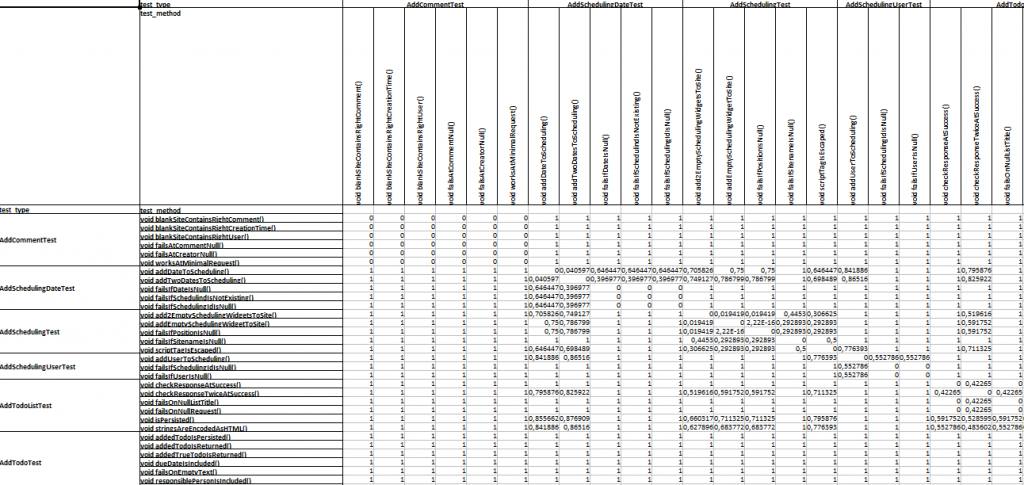

You find the complete DataFrame as Excel file as well (~0.5 MB). It shows all dissimilarities between test cases based on the static calls to production code and looks something like this:

Can you already spot some clusters? We’ll have a detailed look at that in the next blog post ;-)!

Discussion

Let’s have a look at what we’ve achieved by discussing some of the results. We compare the actual source code of the test method readRoundtripWorksWithFullData() from the test class CommentGatewayTest

@Test

public void readRoundtripWorksWithFullData() {

createDefaultComment();

assertEquals(1, commentGateway.read(SITE_NAME).size());

checkDefaultComment(commentGateway.read(SITE_NAME).get(0));

}

with the test method postCommentActuallyCreatesComment() of the another test class CommentsResourceTest

@Test

public void postCommentActuallyCreatesComment() {

this.client.path("sites/sitewith3comments/comments").accept(...

Assert.assertEquals(4L, (long)this.commentGateway.read("sitewith3comments").size());

Assert.assertEquals("comment3", ((Comment)this.commentGateway.read("sitewith3comments").get(3)).getContent());

}

Albeit both classes represent different test levels (unit vs. integration test), they share some similarities (with ~0.1 dissimilarity aka ~90% similar calls to production methods). We can see exactly which invoked production methods are part of both test cases by filtering out the methods in the original invocations DataFrame.

invocations[

(invocations.test_method == "void readRoundtripWorksWithFullData()") |

(invocations.test_method == "void postCommentActuallyCreatesComment()")]

We see that both test methods share calls to the production method read(...), but differ in the call of the method with the name getContent() in the class Comment, because only the test method postCommentActuallyCreatesComment() of CommentsResourceTest invokes it.

We can repeat this discussion for another method named postTwiceCreatesTwoElements() in the test class CommentsResourceTest:

public void postTwiceCreatesTwoElements() {

this.client.path("sites/sitewith3comments/comments").accept(...

this.client.path("sites/sitewith3comments/comments").accept(...

Assert.assertEquals(5L, (long)comments.size());

Assert.assertEquals("comment1", ((Comment)comments.get(0)).getContent());

Assert.assertEquals("comment2", ((Comment)comments.get(1)).getContent());

Assert.assertEquals("comment3", ((Comment)comments.get(2)).getContent());

Assert.assertEquals("comment4", ((Comment)comments.get(3)).getContent());

Assert.assertEquals("comment5", ((Comment)comments.get(4)).getContent());

Albeit the test method is a little bit awkward (with all those subsequent getContent() calls), we can see a slight slimilarity of ~20%. Here are details on the production method calls as well:

invocations[

(invocations.test_method == "void readRoundtripWorksWithFullData()") |

(invocations.test_method == "void postTwiceCreatesTwoElements()")]

Both test classes invoke the read(...) method, but only postTwiceCreatesTwoElements() calls getContent() – and this for five times. This explains the dissimilarity between both test methods.

In contrast, we can have a look at the method void keyWorks() from the test class ConfigurationFileTest, which has absolutely nothing to do (= dissimilarity 1.0) with the method readRoundtripWorksWithFullData() nor the underlying calls to the production code.

@Test

public void keyWorks() {

assertEquals("InMemory", config.get("gateway.type"));

}

Looking at the corresponding invocation data, we see, that there are no common uses of production methods.

invocations[

(invocations.test_method == "void readRoundtripWorksWithFullData()") |

(invocations.test_method == "void keyWorks()")]

Conclusion

We’ve calculated the structural distances between test cases depending on the invocations to production methods. We’ve seen that we can simplify a question about our complex software data to a question that can be answered by standard Data Science techniques.

In the next blog post, we’ll have a deeper look into how we can get some insights into the cohesion of all test classes. We’ll use our distance matrix to visualize and cluster the data by using some simple machine learning techniques.

I hope I could illustrate how the (dis-)similarity calculation of test cases works behind the scenes. If there are any questions or shortcomings I’ve made in my analysis: Please let me know!

You can find this blog post as Jupyter notebook on GitHub.

Pingback:Finding tested code with jQAssistant – feststelltaste

Pingback:Visualizing and Clustering of the Structural Similarities of Test Cases – feststelltaste

Pingback:Checking the modularization of software systems by analyzing co-changing source code files – feststelltaste